Image by Felipe Furtado

Note by:

Uzma Williams, MSc PhD

Uzma.Williams@concordia.ab.ca

JIC Book Review Editor

Evaluation and Measurement Coordinator

Sessional Psychology Instructor – Concordia University of Edmonton

In order to support evidence-based decision-making, a partnership between an interprofessional team and an evaluator (or evaluation team) is often formed to objectively measure changes that result from the implementation of a project or program. The goal of evaluation is to assess the efficacy of a program/project by reporting on both the negative and positive aspects of project work and work environment satisfaction. The evaluator often becomes a working member of the interprofessional team, and it is necessary for the interprofessional team to be vested in evaluation in order for the process and findings to be meaningful.

Evaluators develop a workplan to outline the activities occurring during the course of the evaluation, detailing the timelines for creating data collection tools, collecting data, conducting analyses, and reporting the outcomes. This workplan guides meeting discussions and workload updates. It adds organization and structure to the evaluation timeline so that deadlines are met, the evaluation progresses as planned, and communication with the interprofessional team remains clear.

A vital document complementing the workplan is the evaluation framework. This document follows the traditional tenets of program planning and evaluation. The evaluation framework outlines what is being measured and how it is being measured. It is shared with the interprofessional team to clearly communicate the evaluation activities and to avoid disagreement regarding goals later in the project. Nonetheless, shifts in measurement goals are often necessary, and are encouraged, when adaptations are needed to ensure a successful evaluation. Typically, the interprofessional team identifies outcomes for measurement, which are then operationalized by the evaluator.

Operationalization of Performance Measures

Defining performance measures early in the project will ensure everyone has a clear understanding of what outcomes will be measured and how. Defining performance metrics tangibly allows all involved team members to concretely visualize how outcomes will be measured. This explication will prevent conflict at later stages of the initiative. Disagreement over abstract measures will be avoided and lines of communication will be improved because there will be agreement on the metrics being measured. For example, a success indicator for measuring care team satisfaction might initially be vaguely defined as “the changes the care team witnesses”. Misunderstandings will quickly become evident because it is unclear how this metric would be measured or what would indicate success, so members of the interprofessional team will need to clarify the definition of success for this measure, with the evaluator ensuring the measure can be adequately operationalized.

A Focus on Qualitative Inquiry in Health Settings

Note Keeping

There are many unforeseeable pitfalls of evaluation in a health care setting, especially with qualitative inquiry such as interviews or focus groups. For instance, the evaluator may have to wait for the interviewee or reschedule because interprofessional members working on a hospital unit may get too busy with work duties or may have no break coverage at the time. The evaluator may make notes of the unanticipated obstacles and how they were resolved, and this information can be included as additional notes in the evaluation report. One learned strategy, for example, is to ensure that there is an adequate amount of time between interviews. Another key strategy for success in qualitative health care evaluation is diligently following the key tenets of evaluation. The detailed description of evaluation principles, such as logic models, and of the steps of the methodological procedures within the workplan ensures the stability of the initiative in three key ways: 1) interprofessional members learn key components and processes if they are unfamiliar with evaluation; 2) the project and evaluation can be continued without a large disruption if any team member leaves; 3) the project can be replicated in the future. The first point emphasizes the importance of helping all project participants understand the common language used in evaluation, because these terms are referred to throughout the initiative and evaluation and will appear again if the interprofessional member engages in future initiatives requiring evaluations.

Advancing Credibility through Good Judgement and Objectivity

Although human error is introduced into qualitative methods through coding and analysis, a large number of safeguards increase the quality and authenticity of evaluation initiatives. Investigators should always ensure that their values do not interfere with data interpretation (Patton, 1999). A strong degree of insight is required, and swaying the data to “what feels and sounds right” must be avoided. Even when tempted to emphasize or deemphasize certain issues when coding data, an evaluator must work through the data with an objective mindset. The emphasis, regardless of the chosen methodological technique, is on acquiring reliable results. If other investigators analyze the data using the same analytic technique, they should arrive at the same themes. Using a standardized methodology, such as grounded theory or interpretive phenomenology, is necessary for reliability and consistency, and the methodology should be adapted to what will best represent the perspectives of those who participated (Cutcliffe, 2000). Consultation with colleagues and the Project Team to ensure the accuracy of the findings lends strong credibility to the findings.
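One way to check whether two investigators "arrive at the same themes" is to compare their codes segment by segment. The sketch below uses Cohen's kappa, a common chance-corrected agreement statistic. It is offered only as an illustration and is not a method named in this note. The theme labels and the two coders' assignments are hypothetical.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Chance-corrected agreement between two coders on the same segments."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must code the same non-empty set of segments.")
    n = len(codes_a)
    # Proportion of segments where both coders assigned the same theme.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Agreement expected by chance, from each coder's theme frequencies.
    freq_a = Counter(codes_a)
    freq_b = Counter(codes_b)
    expected = sum(freq_a[theme] * freq_b[theme] for theme in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # Both coders used one identical theme throughout.
    return (observed - expected) / (1 - expected)


# Hypothetical themes assigned by two coders to six interview segments.
coder_1 = ["workload", "communication", "workload", "trust", "communication", "workload"]
coder_2 = ["workload", "communication", "trust", "trust", "communication", "workload"]

kappa = cohens_kappa(coder_1, coder_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

A low value would suggest revisiting the coding scheme with colleagues before reporting themes. What counts as acceptable agreement is a judgement for the evaluation team.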

Employing a Mixed Methodology Approach

The use of qualitative methods to evaluate health care initiatives, in comparison to quantitative methods, is greatly disproportionate, with only 6.18% of articles comprising qualitative reporting (Wisdom, Cavaleri, Onwuegbuzie, & Green, 2012). Real-world health care evaluations typically require investigators to use both qualitative and quantitative methodologies to best report findings. The time available is a key factor in determining the data collection tools (e.g., surveys, administrative or patient data records, interviews, journals, focus groups, observation, etc.). Ideally, quantitative measures are used to provide average perceptions of processes such as staff satisfaction, whereas qualitative data provide in-depth, rich detail. The amount of change, for example an increase or decrease in care team satisfaction or annual care team turnover, is captured by quantitative techniques, whereas the rich commentary on how or why there have been fluctuations in care team satisfaction or turnover is best captured by a qualitative approach (Pope & Mays, 2006).
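As a minimal sketch of the quantitative side, the amount of change in care team satisfaction can be summarized as the difference between average ratings before and after an initiative. The 1-to-5 rating scale and the scores below are hypothetical and serve only to illustrate the calculation. A real evaluation would also need to consider sample size and whether the same respondents answered both surveys.

```python
from statistics import mean


def mean_change(before, after):
    """Difference between the average follow-up and average baseline ratings."""
    if not before or not after:
        raise ValueError("Both sets of ratings must contain at least one score.")
    return mean(after) - mean(before)


# Hypothetical satisfaction ratings on an assumed 1-to-5 scale.
baseline = [3, 4, 2, 3, 4]
follow_up = [4, 4, 3, 5, 4]

change = mean_change(baseline, follow_up)
print(f"Average satisfaction changed by {change:+.1f} points")
```

The number shows how much satisfaction moved. Why it moved is the question that interviews and focus groups are better placed to answer.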

Communication to Stakeholders

Evaluators need to ensure that they correctly interpret the data in order to accurately represent the experiences of the participants, as this will have powerful implications for organizations. Similarly, when relaying the information back to decision-makers such as senior management, the information provided can have detrimental implications for interprofessional staff. Therefore, it is the responsibility of the evaluator to ensure the evaluation reports are understood accurately by decision-makers. This accountability is part of an ethical safeguard within the scope of professional practice that applies to all evaluators engaged in health initiatives. Explaining the methodology used to extract the results thoroughly and in understandable terms is necessary so both care providers and decision-makers can understand every step of data interpretation. Reliable data in qualitative designs are achieved by minimizing departure from the obtained content. In other words, keeping words close to those of the participants within their respective contexts increases reliability because the information is minimally changed. For the purpose of many evaluations, data need to be aggregated so individuals or even disciplines cannot be identified. Often, the methodology is documented to ensure a standardized procedure is used throughout the analysis. The documentation process reinforces the reliability and validity of the evaluation to ensure the findings are credible. Interpreting the data accurately is a top priority for evaluators to ensure the feelings, behaviours, and experiences of the interprofessional team are accurately relayed to the decision-makers. Especially in health care settings where there are vulnerable patients at risk, it is crucial to properly analyze and interpret results of qualitative evaluation because aggregate experiences are often the only form of data analysis (Willig, 2013).

The results shared with decision-makers impact a wide variety of dynamics such as allocating resources and services, changing the current environment, shaping future initiatives, and, ultimately, changing patient care protocols. The evaluator is also responsible and accountable for ensuring that decision-makers understand the data, including:

- limitations of data collection techniques

- limitations of analytic methodologies

- limitations of applications (e.g. inability to generalize or compare to other samples)

- pitfalls (e.g. inability to determine causation and draw inferences)

- quality of the evaluation

Relationships between the Interprofessional Team and Evaluation Team

One approach to enhancing the integrity of the evaluation is to create a strong relationship with the project team. Key features of developing healthy professional relationships consist of open communication, trust, respect, and diversity (Tallia, Lanham, McDaniel, & Crabtree, 2006) as well as integrity, kindness, and warmth. In order to obtain reliable and valid data, a strong, meaningful connection and effective working relationship must be built with the interprofessional team to obtain clarity on received information and to understand the implications of the information. The rapport built between the evaluator and the interprofessional team allows for an effective, trusting relationship in which the evaluator can ask the care team questions, understand the care team's responses, and relay those messages to the senior management team. Evaluation inquiry provides the ability to facilitate positive changes by working together to share a common language. Rather than just evaluating the impact of an initiative, the evaluator works with the interprofessional team to improve their environment by thoroughly understanding their experiences and making connections among the work roles of interprofessional colleagues. Ensuring that feedback from the interprofessional team is properly documented and relayed to senior management bridges communication gaps, and as a result of credible evaluation, the interprofessional team is instilled with a sense of change and accomplishment.

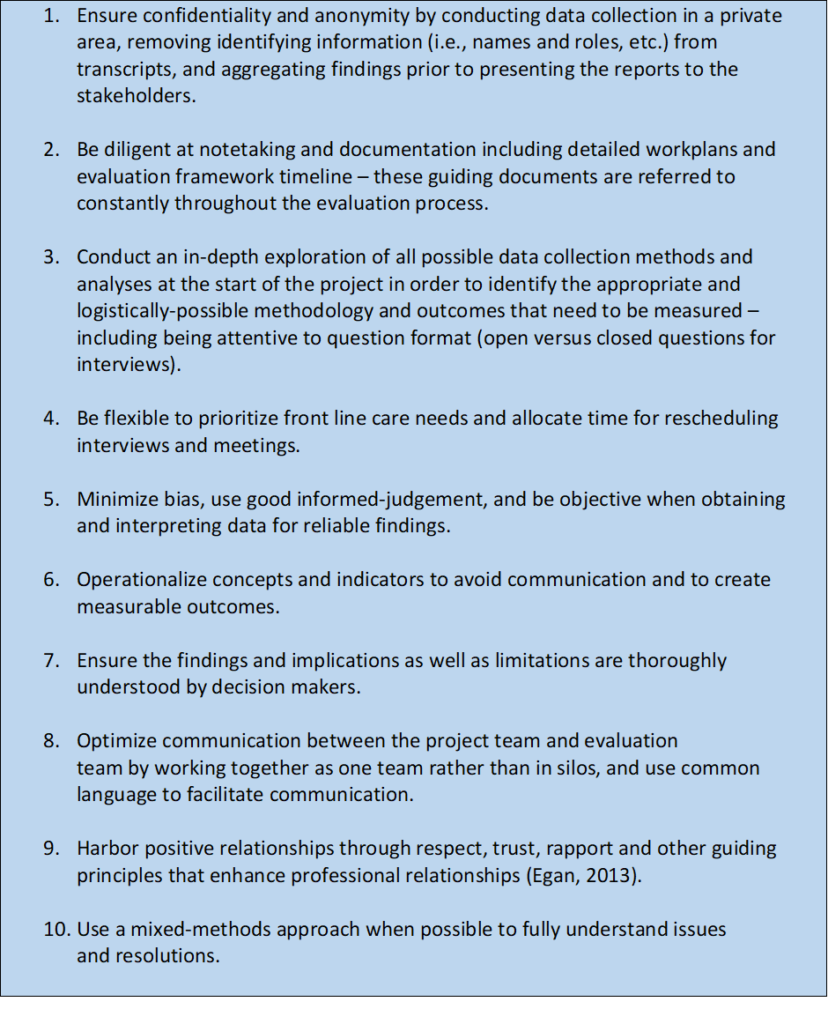

Lessons Learned from Health Care Evaluations and Working with Interprofessional Teams

References:

Cutcliffe, J. R. (2000). Methodological issues in grounded theory. Journal of Advanced Nursing, 31(6), 1476-1484.

Patton, M. Q. (1999). Enhancing the quality and credibility of qualitative analysis. Health Services Research, 34(5), 1189-1208.

Pope, C., & Mays, N. (Eds.). (2006). Qualitative research in health care (3rd ed.). Malden, MA: Blackwell Publishing Ltd.

Tallia, A. F., Lanham, H. J., McDaniel Jr, R. R., & Crabtree, B. F. (2006). Seven characteristics of successful work relationships. Family Practice Management, 13(1), 47.

Willig, C. (2013). Introducing qualitative research in psychology (3rd ed.). Berkshire, UK: Open University Press.

Wisdom, J. P., Cavaleri, M. A., Onwuegbuzie, A. J., & Green, C. A. (2012). Methodological reporting in qualitative, quantitative, and mixed methods health services research articles. Health Services Research, 47(2), 721-745.